Proactive Data Security

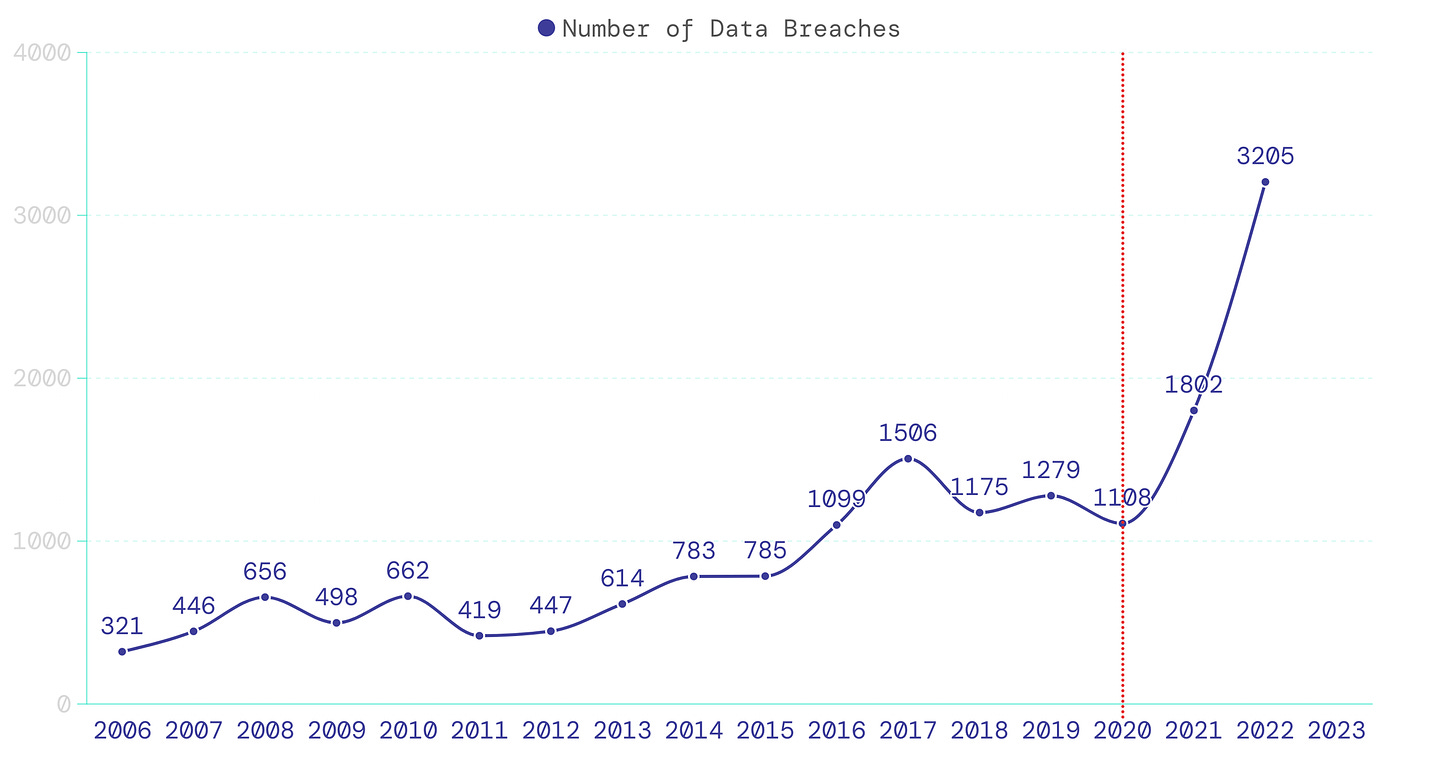

While data breaches are not new, something significantly changed in 2020.

The number of breaches increased significantly post 2020, primarily because businesses rapidly adopted digital solutions in response to COVID-19 but lacked cybersecurity preparedness.

In 2023 alone, data breaches affected 353 million individuals. That's a staggering 6.6% of the world's internet population. The impact on individuals doesn't stop there; consumers continue to face financial scams, identity theft, and more (check out this interactive graphic for more details).

Unsurprisingly, the trend of increased breaches positively correlates with the spending on cybersecurity initiatives. In 2024, companies will spend up to $215 billion on upgrading their cybersecurity posture.

What are companies spending on?

When it comes to cybersecurity, there are broadly two implementation approaches: monitoring tools (which are reactive) and security-by-design tools (which are proactive). While each alone is insufficient, I want to highlight the growing need to focus on implementing security-by-design proactive approaches.

The article is mainly for practitioners and leaders in the Software Engineering, Infrastructure, and Security teams. Given the breadth of areas to cover, I do not intend to analyze each tool or capability in-depth – maybe for a follow-up blog.

Over-Reliance on monitoring tools

The premise of monitoring tools as the be-all and end-all of security is massively oversold. Companies prefer buying or building monitoring tools over solving the problem at its core.

There are a few reasons why:

One is the incentive misalignment between the Security and Engineering teams. The security team must prevent security breaches at all costs, whereas the engineering team must release new features to production as fast as possible.

The CAP theorem equivalent for engineering productivity is f(Quality, Speed, Amount of work); you can only choose two.

What makes matters worse is that, in most organizations, engineers do not empathize enough with their security counterparts. Security members are treated almost as villains who get sadistic pleasure from blocking features on release day!

On the other hand, given the criticality of preventing any breach, security folks have historically enjoyed very high budgets to do what it takes to build a substantial war chest against attacks.

Thus, monitoring tools become the perfect drug. You achieve the desired security visibility without using engineering cycles. And if you have the budget for it, it's a match made in heaven.

Monitoring is a lagging indicator

The biggest problem with relying on monitoring tools alone is that these tools are a lagging indicator of a problem. These tools usually operate on aggregate data to detect a statistically significant anomaly. Lagging indicators that operate on aggregated data are risky to rely on.

I will take a detour and explain my real experience with a lagging aggregate indicator. This happened in a domain other than security, so please bear with me.

In one of my previous projects, we developed a data platform feature that tracked daily, weekly, and monthly active users. The challenge was that many users were anonymous, requiring us to use fingerprinting techniques for identification. These methods were unreliable five to seven years ago, especially on web browsers.

Our analysis and reporting relied heavily on these user counts. At one point, a bug in our fingerprinting code, affecting a popular browser, led to incorrect user counts.

This situation resembled a salami attack, where small inaccuracies in user counts initially seemed insignificant but compounded over time to become substantial. During this period, decisions were made based on these inaccurate counts, and subsequent decisions were based on the initial ones. The delay in detecting this anomaly made it challenging to reverse the damage.

Another problem with monitoring tools is the net accuracy. It is challenging for these monitoring tools to achieve 100% accuracy.

For instance, we deployed a secret scanner in one of my previous companies. The scanner would scan our codebase and other filesystems to detect any potential secret leak. Given the massive size of our organization's codebase, each scan would return ~10,000 "potential" leaked secrets. You have no clue about how many of those are True Positives. Eventually, there's a time when you stop running these exercises until, unfortunately, a secret leaks.

Two metrics further validate the impact of a lagging indicator:

time_to_contain_breach

time_to_exploit

On average, a typical organization takes 204 days to contain a data breach fully.

On the other hand, attackers take only 32 days to exploit a vulnerability. What is worrying is that this number is expected to decrease significantly as we see more AI adoption. Nikesh Arora, CEO of Palo Alto Networks, articulated this thought very well.

These two metrics clearly show that relying solely on a lagging metric for cybersecurity is not a wise choice.
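To make the gap between the two figures concrete, here is a rough back-of-the-envelope sketch. It simply compares the two averages quoted above; the function name is hypothetical, and the two clocks do not start at the same event, so treat the result as an illustration rather than a measurement.

```python
# Averages quoted in this article; rough industry figures, not exact values.
TIME_TO_CONTAIN_BREACH_DAYS = 204
TIME_TO_EXPLOIT_DAYS = 32


def exposure_gap_days(time_to_contain: int, time_to_exploit: int) -> int:
    """Days by which containment trails exploitation (never negative)."""
    return max(time_to_contain - time_to_exploit, 0)


gap = exposure_gap_days(TIME_TO_CONTAIN_BREACH_DAYS, TIME_TO_EXPLOIT_DAYS)
ratio = TIME_TO_CONTAIN_BREACH_DAYS / TIME_TO_EXPLOIT_DAYS
print(f"Containment trails exploitation by {gap} days ({ratio:.1f}x slower)")
```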

Case for more proactive measures

I am not the first to propose proactive measures (also referred to as shift-left); however, to my surprise, three in four companies I interviewed had yet to invest significantly in such capabilities.

A company recently acquired for double-digit billions of dollars managed 100+ API keys in a spreadsheet. According to their DevOps lead, it was alright because only four team members had access to the spreadsheet.

The LastPass incident continues to teach us the perils of high reliance on humans for security.

There are at least a few areas where I strongly feel that securing data by design is a winning strategy; these include understanding:

Why & What you collect?

Where & How you store?

Why, How, & Who accesses?

Why & Where you share?

Let's dive into each.

Why & What data you collect?

Knowing your data is the first step to securing your data.

As engineers & product managers, we often have a free hand in collecting & using data to achieve business outcomes. We are biased toward doing whatever improves the customer experience. An engineer working on Personalisation will include the user's gender, preferences, etc., to improve recommendations. To enhance transaction success, a fraud-risk product manager will include the user's location, age, etc.

Is that wrong? Certainly not. However, if you do not ask why every time, the engineer will start using the user's race, or the product manager will start "securely" reading the user's SMS data to improve their model's efficiency by 0.1%!

Ironically, knowing your data is often an afterthought. Months after collecting, using, and sharing data, organisations decide to set up Data Cataloging tools to visualise data usage.

I often visualise these decisions as reverse onion peeling. You start with the onion core and layer a peel whenever you decide. If you do not evaluate your decisions carefully, several decisions and peelings later, you realize this is not the onion you wanted.

A simple approach is to add a checklist item to your PR or PRD to review whether consumer data is being used or processed and, if so, to justify the need.
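The checklist can be backed by a lightweight automated nudge. The following is a minimal sketch, assuming a unified diff as input; the hint list and the function name are hypothetical and would need tuning for your own schema. It only prompts the reviewer to ask "why"; it does not replace the review.

```python
import re

# Hypothetical field-name hints; adjust to your own data model.
SENSITIVE_HINTS = frozenset(
    {"email", "phone", "ssn", "gender", "race", "location", "dob", "address"}
)

# Splits identifiers such as user_email or userEmail into separate words.
_WORD = re.compile(r"[A-Z]?[a-z]+|[A-Z]+(?![a-z])")


def flag_sensitive_lines(diff_text: str) -> list[tuple[int, str]]:
    """Return (line_number, hint) for added diff lines that mention a hint."""
    findings = []
    for number, line in enumerate(diff_text.splitlines(), start=1):
        # Only inspect added lines; skip the "+++ b/file" header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for word in _WORD.findall(line):
            if word.lower() in SENSITIVE_HINTS:
                findings.append((number, word.lower()))
    return findings
```

Matching on whole words rather than substrings keeps an identifier like `trace_id` from being flagged because it contains "race".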

Additionally, data cataloging & observability tools such as Amundsen, DataHub, OpenMetadata, AccelData, Atlan, etc., along with data scanning tools such as PII Catcher, OctoPII (for images, documents, etc.) should be explored.

Where & How are you storing?

Knowing your data significantly reduces the problem space.

Once you know your sensitive data, you must always store it separately from the rest of your data. Separating the data further reduces the problem space, allowing you to implement stricter access & governance policies on the isolated data.

This separation must ideally occur at the left-most perimeter of your infrastructure – as early as possible once the data reaches your backend.

There are a few approaches.

A simple approach is to take this decision at an API handler or ORM layer. Once the data reaches your application code, you can set up rules to route the sensitive data to a secure store, while the remaining data can be stored in your regular datastore.
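As a minimal sketch of the handler-level split, assume a signup handler and two stores with a `save` method. The field list, `InMemoryStore`, and `handle_signup` are all hypothetical stand-ins for your own schema, datastores, and handlers.

```python
from dataclasses import dataclass, field

# Assumption: the fields your team has classified as sensitive.
SENSITIVE_FIELDS = frozenset({"email", "phone", "date_of_birth"})


@dataclass
class InMemoryStore:
    """Stand-in for a real datastore; keeps rows in a dict."""
    rows: dict = field(default_factory=dict)

    def save(self, key: str, record: dict) -> None:
        self.rows[key] = dict(record)


def split_record(record: dict, sensitive_fields=SENSITIVE_FIELDS):
    """Split a record into (sensitive, regular) parts by field name."""
    sensitive = {k: v for k, v in record.items() if k in sensitive_fields}
    regular = {k: v for k, v in record.items() if k not in sensitive_fields}
    return sensitive, regular


def handle_signup(user_id: str, payload: dict, secure_store, regular_store) -> None:
    """Route sensitive fields to the secure store, the rest to the regular one."""
    sensitive, regular = split_record(payload)
    if sensitive:
        secure_store.save(user_id, sensitive)
    regular_store.save(user_id, regular)
```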

Another approach is to separate at an API gateway layer. VGS has a reference architecture for this pattern. While this option is more foolproof, it is complex to implement. Additionally, the application context spills over to the infrastructure layer in this approach; however, the centralized approach ensures higher separation efficacy.

Once the sensitive data is siphoned away and stored separately, the rest of the entities can refer to it using tokenized identifiers. Tokenization is a mature concept widely used in the payments industry. AWS has a blog post that you can refer to when implementing tokenisation.
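To illustrate the idea, here is a minimal in-memory sketch of tokenization. `TokenVault` is a hypothetical class, not the AWS design or any vendor's API; a real vault would persist the mapping in an encrypted, access-controlled store.

```python
import secrets


class TokenVault:
    """Maps sensitive values to random tokens that carry no meaning themselves."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        """Return the existing token for a value, or mint a new random one."""
        existing = self._value_to_token.get(value)
        if existing is not None:
            return existing
        token = "tok_" + secrets.token_urlsafe(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        """Look up the original value; raises KeyError for unknown tokens."""
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("jane@example.com")
# Other tables store only the token; the vault alone can reverse it.
order_row = {"order_id": 42, "customer_email_token": token}
```

Because the token is random rather than derived from the value, leaking `order_row` reveals nothing about the email address unless the vault is compromised too.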

You can explore a few commercial solutions in this space, such as SkyFlow, Very Good Security, HashiCorp Vault, etc.

Why & Who is accessing data?

Once we identify and securely store sensitive data, we must restrict access.

We mostly worry about humans having access to data. It is fair in many ways because human error is one of the leading reasons for data breaches.

However, do you know that on average, organizations have 45 machine identities to manage for every 1 human? And this gap is widening very fast.

This makes it crucial to know and govern which machine has access to what data and with what privileges. The machine here can refer to any compute resource that runs your application code, scripts, bots, etc.

Securing access requires you to implement the following:

A secure way to generate, store, and use credentials (Passwords, API keys, Tokens, Service Accounts, etc.).

A proactive review of permissions granted to every credential
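A proactive permission review can start as a simple comparison of what each identity is granted against what it has actually used. The sketch below assumes you can export both sets (for example, from IAM policies and audit logs); the function name and input shapes are hypothetical.

```python
def unused_permissions(
    granted: dict[str, set[str]], used: dict[str, set[str]]
) -> dict[str, set[str]]:
    """Return, per identity, the permissions that are granted but never used."""
    report = {}
    for identity, permissions in granted.items():
        extra = permissions - used.get(identity, set())
        if extra:
            report[identity] = extra
    return report


granted = {
    "billing-service": {"db:read", "db:write", "s3:delete"},
    "report-bot": {"db:read"},
}
used = {"billing-service": {"db:read", "db:write"}, "report-bot": {"db:read"}}
# Identities listed in the report are candidates for tighter permissions.
report = unused_permissions(granted, used)
```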

Companies are often nonchalant about identity and access governance, particularly with non-human identities.

A secrets manager is one of the first tools you must set up. However, with the increased adoption of third-party vendors in the stack, there is a rising need to implement operational controls, such as how the credentials are shared with the runtime, how to prevent a human from accessing a credential, how to rotate credentials periodically, and so on.

Beyond secrets managers, ensuring that no human has long-lived access to any credential has become critical. Numerous options are available today to implement secure access channels for humans.

Tools such as P0, ConductorOne, and others have made it extremely straightforward to set up just-in-time access control.

Further, multiple Privileged Access Management (PAM) tools improve auditability and inject rich governance into access.

A thing to note is that organizations often have variable success setting up PAM tools on production, given the implementation complexity; however, having a secrets manager (with operational automation) and a just-in-time access provisioner must be the first things to explore as you start the journey.

Why & Where is data shared?

Despite setting up enough access guardrails, employees will likely share sensitive data with third parties for legitimate reasons. For instance, sharing customer data on CRMs, internal conversations on Slack, customer issues on Jira, etc.

Unchecked growth will cause problems:

Of the CISOs I interviewed, two out of four knew only a small subset of all vendors in use.

Few to no guardrails control how and what consumer data humans or programs share externally.

Companies without a legal team do not review Data Processing Agreements before signing up vendors.

In startups & smaller companies, engineers often have a free hand in trying and adopting new SaaS vendors that, many times, may not be compliant.

Knowing where you share data is essential because this data is out of your control. If the vendor does not handle the data carefully, your consumers' data will be vulnerable.

While there are tools that scan for consumer data leaked to vendors, I would only rely partially on them since these scans are fuzzy and depend a lot on the underlying vendor's APIs.

The obvious starters are setting up a vendor onboarding process, single-sign-on tools, etc., but as your infrastructure and business mature, you must increasingly invest resources in setting up a zero-trust architecture; as a start, SASE for human access.
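For data that programs share with vendors, one proactive guardrail is an explicit per-vendor allow-list applied before anything leaves your systems. The following is a minimal sketch; the vendor names, field names, and `payload_for_vendor` are hypothetical.

```python
# Assumption: fields approved for each vendor during onboarding.
VENDOR_ALLOWED_FIELDS = {
    "crm": frozenset({"account_id", "plan", "signup_date"}),
    "ticketing": frozenset({"account_id", "issue_summary"}),
}


def payload_for_vendor(vendor: str, record: dict) -> dict:
    """Keep only fields approved for this vendor; reject unknown vendors."""
    try:
        allowed = VENDOR_ALLOWED_FIELDS[vendor]
    except KeyError:
        raise PermissionError(f"vendor {vendor!r} has not been onboarded") from None
    return {k: v for k, v in record.items() if k in allowed}


record = {"account_id": "a-17", "plan": "pro", "email": "jane@example.com"}
# The email is dropped because it is not on the CRM allow-list.
outbound = payload_for_vendor("crm", record)
```

An allow-list fails closed: a new field or a new vendor shares nothing until someone explicitly approves it, which is the opposite of scanning for leaks after the fact.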

Conclusion

🫡 Collecting consumer data is a responsibility, not a privilege.

As our digital footprint expands, so does the number of internet users. Unfortunately, many users lack an understanding of how much sensitive data is collected about them, and therefore do not raise objections. Even a slight mishandling of this data can lead to severe consequences for our consumers.

Often, the development of security capabilities is deferred until a company hires its first CISO or reaches a critical milestone, such as acquiring the first enterprise customer or expanding into a new geographic market. This delay is typically attributed to a perceived lack of security skills and focus within the organization. The problem with this approach is the extensive migration work required later, which often turns into a game of catch-up with newer software releases.

While adopting secure-by-design practices may seem daunting initially, the approach yields compounding benefits as it becomes woven into the fabric of your everyday processes.

Thank you, Shadab, for reviewing the post.